LLVM from beginner to tomb(keeper) in 2025

LLVM (Low Level Virtual Machine) is a target-independent optimizer and code generator, according to its Wikipedia entry. Interestingly, LLVM serves many purposes, yet despite its name and prominence it is rarely regarded as a virtual machine project. LLVM developers need a strong grasp of compiler principles, but if we treat LLVM as a program analysis tool, all we really need is an understanding of how its APIs work. This post walks you through writing a simple, toy-level LLVM Pass in 2025, step by step.

First of all, build the LLVM Project

There are two main ways to implement an LLVM Pass: one is to write the Pass inside LLVM’s library source code (the official tutorial) and compile it into the opt binary; the other is to develop it out-of-source (a GitHub repository describes this method) and compile it independently. After reading countless old blogs and tutorials found through Google, I’ve finally admitted that LLVM’s official tutorial is by far the most helpful of all the resources I’ve found 🤖. So we will follow the official tutorial to build LLVM step by step.

First, we clone the LLVM repo from the official project URL on a Linux system. I use Arch Linux in WSL2, btw 🤓.

```bash
git clone --depth 1 https://github.com/llvm/llvm-project.git
```

We use the latest version of LLVM, then start building the project.

```bash
cd llvm-project
```

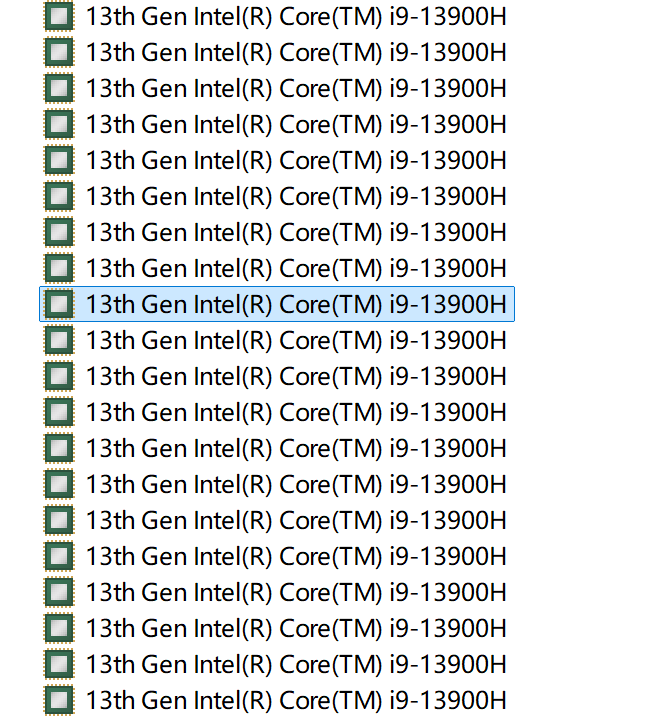

If you don’t have the clang compiler on your system, you need to add the clang project through the LLVM_ENABLE_PROJECTS variable, or you can write the LLVM IR by hand. The build can take a long time, because compile speed depends largely on your CPU. In my case, it took about 6 hours to compile the necessary binaries. My CPU’s specification is shown below.

After a long compilation process, we’ve finally generated the target binary. Please make sure build/bin/opt exists, as it is required for the next steps. Since clang is only needed to generate LLVM IR, building it from source is not necessary.

Let’s start writing a baby-level LLVM Pass!

The official LLVM tutorial recommends writing a Pass within an existing directory of the LLVM project and compiling it into the opt binary. This way, we can run the Pass by passing the corresponding option to opt. Since opt is already compiled with the helloworld pass from the official tutorial, we’ll write another LLVM pass by extending the existing code 👽.

According to the official tutorial, the HelloWorld.h header file is located at llvm/include/llvm/Transforms/Utils/HelloWorld.h and the HelloWorld.cpp file at llvm/lib/Transforms/Utils/HelloWorld.cpp. I won’t show the source code here because it already ships with the LLVM project.

First, we’ll create a header file named HelloPass.h in the llvm/include/llvm/Transforms/Utils directory.
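A minimal sketch of what such a header might contain, modeled on the HelloWorld.h that ships with LLVM. The class name HelloPass comes from the registration line used later in this post; the include guard name is an assumption, and the original listing may have differed in its details.

```cpp
// llvm/include/llvm/Transforms/Utils/HelloPass.h
// Sketch only: mirrors the layout of LLVM's HelloWorld.h.
#ifndef LLVM_TRANSFORMS_UTILS_HELLOPASS_H
#define LLVM_TRANSFORMS_UTILS_HELLOPASS_H

#include "llvm/IR/PassManager.h"

namespace llvm {

// A function pass for the new pass manager. PassInfoMixin supplies the
// boilerplate (such as the pass name) that the pass manager expects.
class HelloPass : public PassInfoMixin<HelloPass> {
public:
  // Invoked by the pass manager for each function definition in the module.
  PreservedAnalyses run(Function &F, FunctionAnalysisManager &AM);
};

} // namespace llvm

#endif // LLVM_TRANSFORMS_UTILS_HELLOPASS_H
```

This file only compiles inside the LLVM source tree, so it is meant to be dropped in place rather than built on its own.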

The header closely mirrors HelloWorld.h. Next, we’ll write an enhanced version of HelloWorld.cpp that prints the opcode name of every instruction and the address of one instruction in each basic block of the function.
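A minimal sketch of what HelloPass.cpp might look like, assuming a HelloPass class declared in llvm/Transforms/Utils/HelloPass.h with the same run signature as HelloWorldPass. The output format is made up for illustration, and printing the address of the first instruction via BB.front() is an assumption; the original may have picked a different instruction.

```cpp
// llvm/lib/Transforms/Utils/HelloPass.cpp
// Sketch only: an inspection pass that prints, but never modifies, the IR.
#include "llvm/Transforms/Utils/HelloPass.h"
#include "llvm/IR/BasicBlock.h"
#include "llvm/IR/Function.h"
#include "llvm/IR/Instruction.h"
#include "llvm/Support/raw_ostream.h"

using namespace llvm;

PreservedAnalyses HelloPass::run(Function &F, FunctionAnalysisManager &AM) {
  // Function name and total number of instructions in it.
  errs() << "Function: " << F.getName() << " ("
         << F.getInstructionCount() << " instructions)\n";

  for (BasicBlock &BB : F) {
    // Address of the first instruction in this block (assumed choice).
    if (!BB.empty())
      errs() << "  block starts at "
             << static_cast<const void *>(&BB.front()) << "\n";

    // Opcode name of every instruction, e.g. "add", "load", "ret".
    for (Instruction &I : BB)
      errs() << "    " << I.getOpcodeName() << "\n";
  }

  // Nothing was changed, so every analysis result stays valid.
  return PreservedAnalyses::all();
}
```

Returning PreservedAnalyses::all() tells the pass manager that the IR was only inspected, which is the right choice for a pass that just prints.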

As the API names indicate, the custom Pass prints the name and instruction count of every function, the address of one instruction in each BasicBlock, and the opcode name of every instruction. We name this source file HelloPass.cpp and place it in the llvm/lib/Transforms/Utils directory. Additionally, we need to modify three files to integrate HelloPass into the opt binary.

- In llvm/lib/Transforms/Utils/CMakeLists.txt, add the line: HelloPass.cpp
- In llvm/lib/Passes/PassBuilder.cpp, add the line: #include "llvm/Transforms/Utils/HelloPass.h"
- In llvm/lib/Passes/PassRegistry.def, add the following line:

```cpp
FUNCTION_PASS("hellopass", HelloPass())
```

Interestingly, the official tutorial overlooks one crucial file: llvm/lib/Passes/PassBuilder.cpp 😭. Now that all the preparations are done, it’s time to rebuild the opt binary by following the build instructions from earlier. Since most of the tree is already built, running ninja -C build opt only recompiles what changed, so this round is much faster.

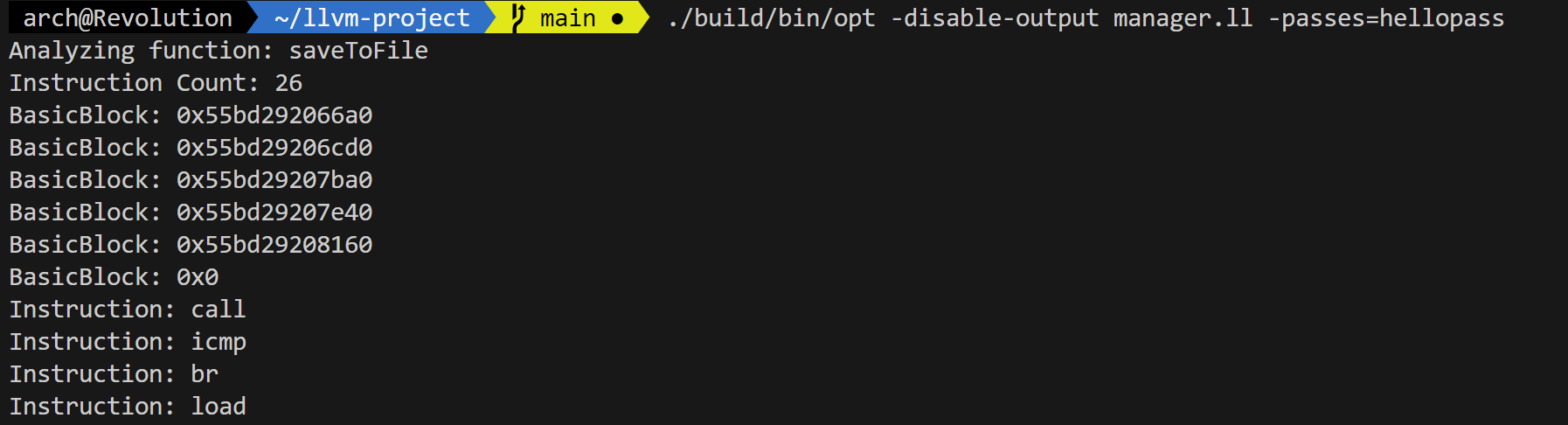

Once build/bin/opt is compiled, we can finally run our custom LLVM Pass! I used GPT-4 to generate a sophisticated C source file and compiled it into an .ll IR file using clang -O1 -S -emit-llvm manager.c -o manager.ll. Then, we run the Pass using the opt binary:

```bash
./build/bin/opt -disable-output manager.ll -passes=hellopass
```

The output is:

Great! I successfully ran my first LLVM Pass 😆.

Reasoning: What we’ve done and how PolyCruise uses LLVM

Basically, an LLVM Pass is a handler for LLVM IR, which makes it work like a static analysis tool with LLVM IR as the object of analysis. So far, front ends that emit LLVM IR mainly exist for compiled languages such as C, C++, Objective-C, Objective-C++, and Rust, which means most interpreted languages are not covered. LLVM IR is incredibly powerful, and LLVM Passes can take full advantage of the rich analysis capabilities built around it.

PolyCruise introduces a method to trace data flow across different languages. For compiled languages (especially C), PolyCruise compiles the code to LLVM IR, transforms the IR into a lower-level language called LISR, and finally converts it into a def-use format that keeps only what data-flow tracing needs. For interpreted languages (especially Python), PolyCruise uses dynamic instrumentation to perform the data-flow tracing. By combining these two methods in its DIFA (dynamic information flow analysis), PolyCruise can backtrack data across languages.
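To make the def-use idea a bit more concrete, here is a toy sketch in plain C++. It is not PolyCruise’s implementation: the DefUse struct and the traceTaint function are hypothetical names, and the propagation rule (a definition is tainted when any value it reads is tainted, and cleaned when it is overwritten from untainted values) is a simplifying assumption.

```cpp
#include <set>
#include <string>
#include <vector>

// One statement reduced to def-use form: `def` is written, `uses` are read.
// Hypothetical type for illustration; not PolyCruise's actual format.
struct DefUse {
  std::string def;
  std::vector<std::string> uses;
};

// Walk the statements in program order and propagate taint forward.
// Returns the set of variables that are tainted after the last statement.
std::set<std::string> traceTaint(const std::vector<DefUse> &stmts,
                                 const std::set<std::string> &sources) {
  std::set<std::string> tainted = sources;
  for (const DefUse &s : stmts) {
    bool readsTainted = false;
    for (const std::string &u : s.uses) {
      if (tainted.count(u)) {
        readsTainted = true;
        break;
      }
    }
    if (readsTainted)
      tainted.insert(s.def); // taint flows from a use into the definition
    else
      tainted.erase(s.def);  // overwritten with untainted data
  }
  return tainted;
}
```

For the statements b = a; c = b + k; d = k; a = k with a as the only source, the function reports b and c as tainted, while d never becomes tainted and a is cleaned by the final overwrite.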